The Math

The notion of “a tensor” is commonly defined in two different ways. The first definition goes roughly like this (“roughly” means “do not tell this to your math teacher”):

A tensor is a geometrical object that can be represented in some coordinate system as an n-dimensional array (it is not the array itself). The quantities in that array depend on the coordinate system in which the representation is done, however there is a precise rule on how these quantities change if we switch to another coordinate system. It is this rule that defines what a tensor (and in particular a vector or a 1-form) is.

The other definition, less used by physicists and more used by mathematicians is (again roughly) the following:

A tensor is the sum of tensor products of forms and vectors. Forms and vectors are themselves given nice geometrical definitions.

It is this second definition that is used in the diffgeom SymPy module. Naturally, we need to define “tensor product”, “vector” and “form” in order to use this definition. Indeed, the structure of the module follows closely these mathematical definitions.

Disclaimer: I have used and will use the words tensor and tensor field interchangeably. In order for this post to make any sense to you, ensure that you understand the difference and are able to find out the exact meaning from the context. The same goes also for vector / vector field and form / form field.

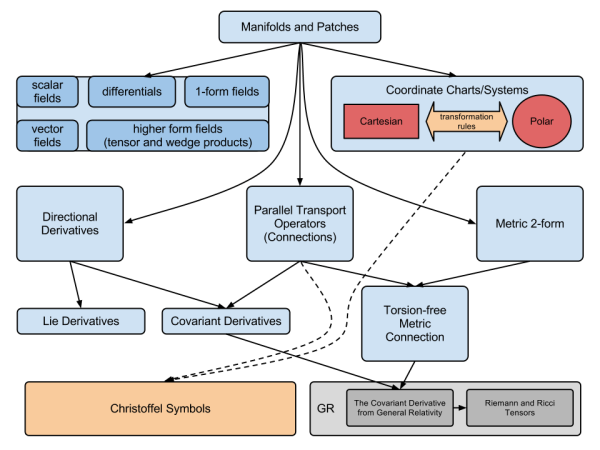

The Implementation

To create the mathematical structure of differential geometry or its implementation in a CAS like SymPy we need to build up the ladder of object definitions. Each new and more interesting notion will depend on the definition of the previous. Hence we start with the boilerplate object “Manifold” and on it we define a “Patch” (the diffgeom module implements all this boilerplate for commonly used manifold, however in order to explain how it works, we will redo everything from scratch):

m = Manifold('my_manifold', 2) # A 2D manifold called 'my_manifold'

p = Patch('my_patch', m) # A patch called 'my_patch'

The first object that does something marginally interesting is the “Coordinate System”. Its role is to permit the parametrization of points on the patch by a tuple of numbers:

cs_r = CoordSystem('R', p) # A coordinate system called 'R' (for rectangular)

point = cs_r.point([1,1]) # A point with coordinates (1, 1)

If you have two or more coordinate systems you can tell the computer how to transform a tuple of numbers from one system to another:

cs_p = CoordSystem('P', p)

cs_r.connect_to(cs_p, [x, y], [sqrt(x**2+y**2), atan2(y,x)])

cs_p.connect_to(cs_r, [r, t], [r*cos(t), r*sin(t)], inverse=False)

# Now the point instances know how to transform their coordinate tuples:

point.coords(cs_p)

# output:

#⎡ ___⎤

#⎢╲╱ 2 ⎥

#⎢ ⎥

#⎢ π ⎥

#⎢ ─ ⎥

#⎣ 4 ⎦

However, differential geometry is not about calculating coordinates of points in different systems. It is about working with fields. Thus, we will focus on a single coordinate system from now on, and to be explicit about its complete independence of whether we want rectangular or other coordinates, we will just call it ‘c’ and leave it unconnected to other coordinate systems.

c = CoordSystem('c', p)

Scalar Field

We continue to build up our ladder of definitions in order to obtain a more interesting and useful theory/implementation. The next step is the “scalar field”. A scalar field is a mapping from the manifold (the set of points) to the real numbers (yes, just reals (maybe complex), the rest brings unnecessary complexity). Each coordinate system brings with itself the basic scalar fields (i.e. coordinate functions), that correspond to the mappings from a point to an element of its coordinate tuple. These basic scalar fields are implemented internally as BaseScalarField instances (this is however invisible to the user).

c.coord_functions()

# output: [c₀, c₁]

point = c.point([a, b])

c.coord_function(0)(point)

# output: a

You can build more complicated fields by using the base scalar fields. This does not produce an instance of some new ScalarField class. The BaseScalarField instances just become a part of the expression tree of an ordinary Expr instance (the base for building expressions in SymPy).

c0, c1 = c.coord_functions()

field = f(c0, 3*c1)/sin(c0)*c1**2

f

# output:

# -1 2

#f(c₀, 3⋅c₁)⋅sin (c₀)⋅c₁

field(point)

# output:

# 2

#b ⋅f(a, 3⋅b)

#────────────

# sin(a)

Vector Field

Then comes the vector field which is defined as an element of the set of differential operators over the scalar fields. All elements of this set can be build up as linear combinations of base vector fields. The base vector fields correspond to the partial derivatives with respect to the base scalar fields. They are implemented in the BaseVectorField class, which also is invisible to the user.

c.base_vectors()

# output: [∂_c_0, ∂_c_1]

e_c0, e_c1 = c.base_vectors()

e_c0(c0)

# output: 1

e_c0(c1)

# output: 0

One can also use more complicated fields (again, no need for new VectorField class, just being part of the expression tree of Expr):

v_field = c1*e_c0 + f(c0)*e_c1

v_field

# output: f(c₀)⋅∂_c_1 + c₁⋅∂_c_0

s_field = g(c0, c1)

v_field(s_field)

# output:

# ⎛ d ⎞│ ⎛ d ⎞│

#f(c₀)⋅⎜───(g(c₀, ξ₂))⎟│ + c₁⋅⎜───(g(ξ₁, c₁))⎟│

# ⎝dξ₂ ⎠│ξ₂=c₁ ⎝dξ₁ ⎠│ξ₁=c₀

v_field(s_field)(point)

# output:

# ⎛ d ⎞│ ⎛ d ⎞│

#b⋅⎜───(g(ξ₁, b))⎟│ + f(a)⋅⎜───(g(a, ξ₂))⎟│

# ⎝dξ₁ ⎠│ξ₁=a ⎝dξ₂ ⎠│ξ₂=b

1-Form Field and Differential

The final ingredient needed for the basis of differential geometry is the 1-form field. A 1-form field is a linear mapping from the set of vector fields to the set of reals. The interesting thing is that all 1-forms can be build-up from linear combinations of the differentials of the base scalar fields.

There is the need to define what a differential is. The differential  of the scalar field

of the scalar field  is the 1-form field which has the following property: for every vector field

is the 1-form field which has the following property: for every vector field  one has

one has  .

.

In the diffgeom module the differential is implemented as the Differential class. The differentials of the base scalar fields are accessible with the base_oneforms() method, however one can construct the differential of whatever scalar field they wish. There is, as always, no dedicated OneFormField class. Everything is build up with Expr instances.

c.base_oneforms()

# output: [ⅆ c₀, ⅆ c₁]

dc0, dc1 = c.base_oneforms()

dc0(e_c0), dc1(e_c0)

# output: (1, 0)

And building up more complicated expressions:

f_field = g(c0)*dc1 + 2*dc0

f_field(v_field)

# output: g(c₀)⋅f(c₀) + 2⋅c₁

The Rest

Now that we have the basic building blocks in order to construct higher order tensors we use tensor and wedge products. More about them in part 2.

What if I Need to Work with a Number of Different Coordinate Systems

The only difference is that the chain rule of differentiation will be necessary. One can express the same statement in a more implementation independent way as “The rule for transformation of coordinates will need to be applied”. Anyhow, it works:

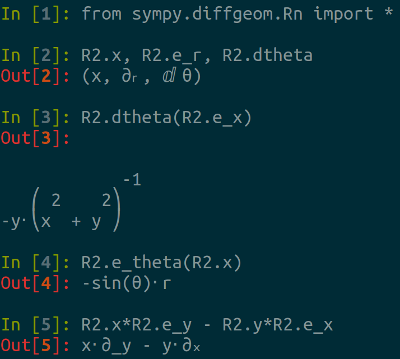

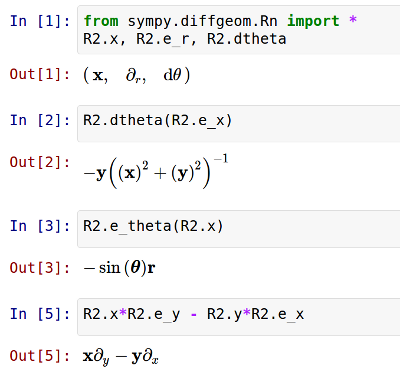

Examples from the already implemented  module.

module.

Points Defined in one Coordinate System and Evaluated in Another

point_r = R2_r.point([x0, y0])

point_p = R2_p.point([r0, theta0])

R2.x(point_r)

# output: x₀

R2.x(point_p)

# output: r₀⋅cos(θ₀)

trigsimp((R2.x**2 + R2.y**2 + g(R2.theta))(point_p))

# output:

# 2

#r₀ + g(θ₀)

Vector Fields Operating on Scalar Fields

R2.e_x(R2.theta)

# output:

# -1

# ⎛ 2 2⎞

#-y⋅⎝x + y ⎠

R2.e_theta(R2.y)

# output: cos(θ)⋅r

1-Form Fields Operating on Vector Fields

R2.dr(R2.e_x)

# output:

# -1/2

#⎛ 2 2⎞

#⎝x + y ⎠ ⋅x

What if I Need an Unspecified Arbitrary Coordinate System

If it is just one coordinate system, nothing; all the examples in the first part were for a completely arbitrary system. If you want two systems with an arbitrary transformation rules between them, just use an arbitrary function when you connect them:

m = Manifold('my_manifold', 2)

p = Patch('my_patch', m)

cs_a = CoordSystem('a', p)

cs_b = CoordSystem('b', p)

cs_a.connect_to(cs_b, [x, y], [f(x,y), g(x,y)], inverse=False)

cs_a.base_vector(1)(cs_b.coord_function(0))

# output:

#⎛ d ⎞│

#⎜───(f(a₀, ξ₁))⎟│

#⎝dξ₁ ⎠│ξ₁=a₁

The diffgeom module is based in its entirety on the work of Gerald Jay Sussman and Jack Wisdom on “Functional Differential Geometry”. The only substantial difference (in what is already implemented) is how the diffgeom module treats operations on fields. Both the diffgeom module and the original Scheme code behave like this:

scalar_field(point) ---> an expression representing a real number

However, the original scheme implementation and the SymPy module behave differently in these cases:

vector_field(scalar_field)

form_field(vector_field)

As far as I know, the original Scheme code produces an opaque object. It indeed represents a scalar field, however  will not produce directly

will not produce directly  . Instead it produces an object that returns 1 when evaluated at a point. The diffgeom module does this evaluation at the time at which one calls

. Instead it produces an object that returns 1 when evaluated at a point. The diffgeom module does this evaluation at the time at which one calls  without the need to evaluate at a point, thus the result is explicit and not encapsulated in an opaque object.

without the need to evaluate at a point, thus the result is explicit and not encapsulated in an opaque object.

0.000000

0.000000