During the recent GSoC summit I had the chance to participate in many fascinating discussions. One such occasion was while meeting the Sage representative.

One detail he mentioned was that, during his tests, SymPy frequently failed to solve integrals that Sage (using Maxima) was able to solve. An explanation I would like to believe is that he was testing an old version of SymPy, lacking the new integration routines implemented during the last few GSoC projects. Hence my decision to compare the most recent versions of both projects.

The tested versions are SymPy 0.7.2 and Sage 5.3.

Depending on screen size and WordPress theme, the table might be badly formatted, so here is a link to the wiki HTML page and a PDF version.

It should be noted that Sage is more rigorous about the assumptions on its symbols, so it fails to integrate something like $x^n$ if $n$ is not explicitly different from $-1$. I personally think that this is a feature and not a bug. Due to this difference, however, the script used to test Sage differs from the one used for SymPy.
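The case behind this remark is presumably the power rule (the table further down notes that Sage asks whether the denominator is zero for integrands of this kind). The usual antiderivative has $n + 1$ in the denominator, so it is only valid when that denominator is nonzero, and the excluded value needs its own formula:

$$
\int x^n \, dx =
\begin{cases}
\dfrac{x^{n+1}}{n+1}, & n \neq -1, \\
\log x, & n = -1.
\end{cases}
$$

A system that returns only the first branch without knowing anything about $n$ is silently assuming $n \neq -1$.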

Another minor methodological difference is that the timeout pattern I used failed to work for the Sage interpreter. Hence, SymPy integration times out at about 120 seconds, while Sage integration is manually interrupted when it takes too much time.
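For readers unfamiliar with the timeout pattern, here is a minimal Python 3 sketch of the idea, using only the standard `signal` module. The helper name `run_with_timeout` and the toy function `slow` are hypothetical and exist only for this illustration; the scripts used for the tests are listed further down. It works on Unix only and must be called from the main thread.

```python
import signal
import time


class TimeoutException(Exception):
    """Raised by the signal handler when the time budget is exceeded."""


def _raise_timeout(signum, frame):
    raise TimeoutException()


def run_with_timeout(func, seconds, *args):
    """Return func(*args), or the TimeoutException class if it takes too long.

    Hypothetical helper for illustration; not part of SymPy or Sage.
    """
    old_handler = signal.signal(signal.SIGALRM, _raise_timeout)
    # setitimer accepts fractional seconds, unlike signal.alarm.
    signal.setitimer(signal.ITIMER_REAL, seconds)
    try:
        return func(*args)
    except TimeoutException:
        return TimeoutException
    finally:
        # Cancel any pending timer and restore the previous handler.
        signal.setitimer(signal.ITIMER_REAL, 0)
        signal.signal(signal.SIGALRM, old_handler)


def slow(duration):
    """Toy stand-in for a long-running integration."""
    time.sleep(duration)
    return "done"


print(run_with_timeout(slow, 1.0, 0.01))  # finishes within the budget
print(run_with_timeout(slow, 0.1, 2))     # interrupted after about 0.1 s
```

Python runs signal handlers only between bytecode instructions, so a call that spends a long time inside compiled code may not be interrupted promptly. Whether that is what happened with Sage is not something these tests established.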

A final methodological difference is that I purge the SymPy cache between integrals, as otherwise the RAM usage becomes too great.

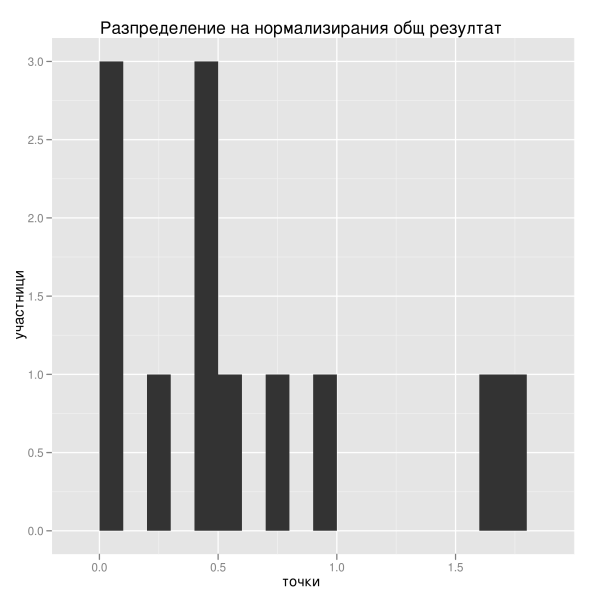

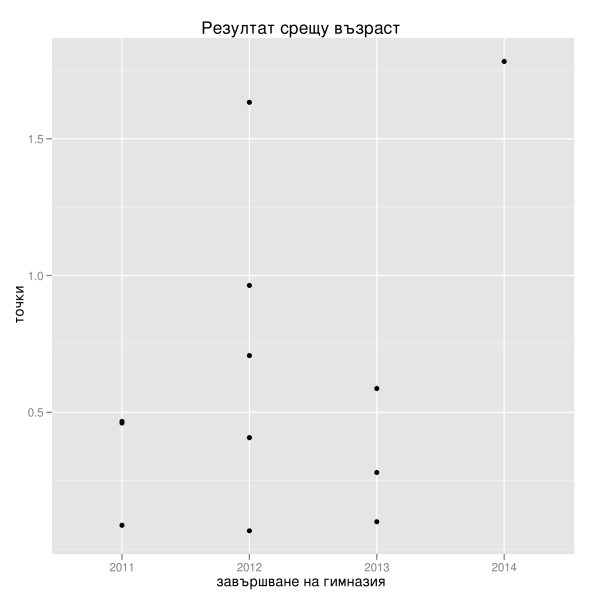

The results show that SymPy is slightly better at using special functions to solve integrals, but there are also a few integrals that Sage solves while SymPy fails to do so. On a few occasions Sage fails disgracefully, meaning that it returns an error instead of an unevaluated integral. When both packages fail to evaluate the integral, SymPy is much slower to say so (a timeout for SymPy compared to 1 or 2 seconds for Sage to return an unevaluated integral). Finally, on some occasions the results from Sage seem better simplified.
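The outcome categories used in this summary can be tallied mechanically from the printed results. The following Python 3 sketch is one way to do it. The function `classify` and its matching rules are assumptions made for this illustration, based only on how results are printed in the table at the end of the post (`Integral(...)` for SymPy, `integrate(...)` for Sage, `Maxima: ...` or `No result` for Sage errors). They are not part of the original test scripts.

```python
from collections import Counter


def classify(result):
    """Assign one printed result string to an outcome category.

    Hypothetical helper: the string patterns below are assumptions about
    the printed output, not an API of SymPy or Sage.
    """
    text = result.strip()
    lowered = text.lower()
    if lowered.startswith("timeout") or lowered.startswith("manual"):
        return "timeout"
    if text.startswith("Maxima:") or text == "No result":
        return "error"
    if "Integral(" in text or "integrate(" in text:
        return "unevaluated"
    # Only the special functions that appear in the table are checked.
    if "lerchphi" in text or "gamma(" in text:
        return "special"
    return "solved"


# A few result strings copied from the table below.
results = [
    "atan(x)",
    "Integral(sqrt(1/(x**2 + 1)), x)",
    "Timeout",
    "Maxima: expt: undefined: 0 to a negative exponent.",
    "x*gamma(1/n)*lerchphi(a*x**n*exp_polar(I*pi), 1, 1/n)/(n**2*gamma(1 + 1/n))",
]

tally = Counter(classify(r) for r in results)
print(tally)
```

Partial answers from Sage that still contain an `integrate(...)` term are counted as unevaluated here, which matches how the comments in the table treat them.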

Integrals solved better by SymPy (if you consider special functions “better”):

with the use of a special function while Sage returns unevaluated integrals

with the use of a special function while Sage returns unevaluated integrals

with the use of a special function while Sage returns unevaluated integrals

with the use of a special function while Sage returns unevaluated integrals

Integrals solved better by Sage:

solved by both but Sage’s result is simpler (it uses arctan instead of log)

SymPy fails this simple integral

solved by both but Sage’s result is much simpler

SymPy fails

SymPy fails

SymPy fails

SymPy fails

The code for the SymPy tests:

    import signal
    from time import clock

    from sympy import *
    from sympy.core.cache import clear_cache


    class TimeoutException(Exception):
        pass


    def timeout_handler(signum, frame):
        # Called on SIGALRM: abort the integration that is running.
        raise TimeoutException()


    a, b, x = symbols('a b x')
    n, m = symbols('n m', integer=True)

    integrants = [
        x,
        a*x**n,
        a*x**n + 1,
        a*x**b + 1,
        1/x,
        1/(x + 1),
        1/(x**2 + 1),
        1/(x**3 + 1),
        1/(a*x**n),
        1/(a*x**n + 1),
        1/(a*x**b + 1),
        a*x**2/(b*x**2 + 1),
        a*x**3/(b*x**3 + 1),
        a*x**n/(b*x**m + 1),
        (a*x**2 + 1)/(b*x**2 + 1),
        (a*x**3 + 1)/(b*x**3 + 1),
        (a*x**n + 1)/(b*x**m + 1),
        (a*x**5 + x**3 + 1)/(b*x**5 + x**3 + 1),
        sqrt(1/x),
        sqrt(1/(x + 1)),
        sqrt(1/(x**2 + 1)),
        sqrt(1/(x**3 + 1)),
        sqrt(1/(a*x**n)),
        sqrt(1/(a*x**n + 1)),
        sqrt(1/(a*x**b + 1)),
        sqrt(a*x**2/(b*x**2 + 1)),
        sqrt(a*x**3/(b*x**3 + 1)),
        sqrt(a*x**n/(b*x**m + 1)),
        sqrt((a*x**2 + 1)/(b*x**2 + 1)),
        sqrt((a*x**3 + 1)/(b*x**3 + 1)),
        sqrt((a*x**n + 1)/(b*x**m + 1)),
        sqrt((a*x**5 + x**3 + 1)/(b*x**5 + x**3 + 1)),
        log(x),
        log(1/x),
        log(1/(x + 1)),
        log(1/(x**2 + 1)),
        log(1/(x**3 + 1)),
        log(1/(a*x**n)),
        log(1/(a*x**n + 1)),
        log(1/(a*x**b + 1)),
        log(a*x**2/(b*x**2 + 1)),
        log(a*x**3/(b*x**3 + 1)),
        log(a*x**n/(b*x**m + 1)),
        log((a*x**2 + 1)/(b*x**2 + 1)),
        log((a*x**3 + 1)/(b*x**3 + 1)),
        log((a*x**n + 1)/(b*x**m + 1)),
        log((a*x**5 + x**3 + 1)/(b*x**5 + x**3 + 1)),
        sin(x),
        sin(x)**n*cos(x)**m,
        sin(a*x)**n*cos(b*x)**m,
        1/sin(x),
        1/(sin(x) + 1),
        1/(sin(x)**2 + 1),
        1/(sin(x)**3 + 1),
        1/(a*sin(x)**n),
        1/(a*sin(x)**n + 1),
        1/(a*sin(x)**b + 1),
        a*sin(x)**2/(b*sin(x)**2 + 1),
        a*sin(x)**3/(b*sin(x)**3 + 1),
        a*sin(x)**n/(b*sin(x)**m + 1),
        (a*sin(x)**2 + 1)/(b*sin(x)**2 + 1),
        (a*sin(x)**3 + 1)/(b*sin(x)**3 + 1),
        (a*sin(x)**n + 1)/(b*sin(x)**m + 1),
        (a*sin(x)**5 + sin(x)**3 + 1)/(b*sin(x)**5 + sin(x)**3 + 1),
    ]

    integrated = []
    durations = []

    # One line per integrand, in the same order in all three dump files.
    f_integrants = open('dump_integrants', 'w')
    f_integrated = open('dump_integrated', 'w')
    f_durations = open('dump_duration', 'w')

    for index, integrant in enumerate(integrants):
        # Purge the SymPy cache so that RAM usage does not grow between integrals.
        clear_cache()
        print '====================================='
        print index, ' of ', len(integrants)
        print '###', integrant
        start = clock()
        try:
            # Give each integral at most 120 seconds.
            old_handler = signal.signal(signal.SIGALRM, timeout_handler)
            signal.alarm(120)
            integrated.append(integrate(integrant, x))
            signal.alarm(0)
        except TimeoutException:
            integrated.append(TimeoutException)
        finally:
            signal.signal(signal.SIGALRM, old_handler)
        durations.append(clock() - start)
        print '###', integrated[-1]
        print 'in %f seconds' % durations[-1]
        f_integrants.write(str(integrant))
        f_integrated.write(str(integrated[-1]))
        f_durations.write(str(durations[-1]))
        f_integrants.write('\n')
        f_integrated.write('\n')
        f_durations.write('\n')

And for Sage:

    import signal
    from time import clock

    from sage.all import *
    from sage.symbolic.integration.integral import indefinite_integral


    class TimeoutException(Exception):
        pass


    def timeout_handler(signum, frame):
        raise TimeoutException()


    a, b, x = var('a b x')
    n, m = var('n m')

    # Sage is stricter about assumptions than SymPy, so they are stated explicitly.
    assume(n, 'integer')
    assume(m, 'integer')
    assume(n != 1)
    assume(n != -1)
    assume(n != 2)
    assume(n>0)
    assume(b != 1)
    assume(b != -1)
    assume(b>0)
    assume(a>0)

    integrants = [
        x,
        a*x**n,
        a*x**n + 1,
        a*x**b + 1,
        1/x,
        1/(x + 1),
        1/(x**2 + 1),
        1/(x**3 + 1),
        1/(a*x**n),
        1/(a*x**n + 1),
        1/(a*x**b + 1),
        a*x**2/(b*x**2 + 1),
        a*x**3/(b*x**3 + 1),
        a*x**n/(b*x**m + 1),
        (a*x**2 + 1)/(b*x**2 + 1),
        (a*x**3 + 1)/(b*x**3 + 1),
        (a*x**n + 1)/(b*x**m + 1),
        (a*x**5 + x**3 + 1)/(b*x**5 + x**3 + 1),
        sqrt(1/x),
        sqrt(1/(x + 1)),
        sqrt(1/(x**2 + 1)),
        sqrt(1/(x**3 + 1)),
        sqrt(1/(a*x**n)),
        sqrt(1/(a*x**n + 1)),
        sqrt(1/(a*x**b + 1)),
        sqrt(a*x**2/(b*x**2 + 1)),
        sqrt(a*x**3/(b*x**3 + 1)),
        sqrt(a*x**n/(b*x**m + 1)),
        sqrt((a*x**2 + 1)/(b*x**2 + 1)),
        sqrt((a*x**3 + 1)/(b*x**3 + 1)),
        sqrt((a*x**n + 1)/(b*x**m + 1)),
        sqrt((a*x**5 + x**3 + 1)/(b*x**5 + x**3 + 1)),
        log(x),
        log(1/x),
        log(1/(x + 1)),
        log(1/(x**2 + 1)),
        log(1/(x**3 + 1)),
        log(1/(a*x**n)),
        log(1/(a*x**n + 1)),
        log(1/(a*x**b + 1)),
        log(a*x**2/(b*x**2 + 1)),
        log(a*x**3/(b*x**3 + 1)),
        log(a*x**n/(b*x**m + 1)),
        log((a*x**2 + 1)/(b*x**2 + 1)),
        log((a*x**3 + 1)/(b*x**3 + 1)),
        log((a*x**n + 1)/(b*x**m + 1)),
        log((a*x**5 + x**3 + 1)/(b*x**5 + x**3 + 1)),
        sin(x),
        sin(x)**n*cos(x)**m,
        sin(a*x)**n*cos(b*x)**m,
        1/sin(x),
        1/(sin(x) + 1),
        1/(sin(x)**2 + 1),
        1/(sin(x)**3 + 1),
        1/(a*sin(x)**n),
        1/(a*sin(x)**n + 1),
        1/(a*sin(x)**b + 1),
        a*sin(x)**2/(b*sin(x)**2 + 1),
        a*sin(x)**3/(b*sin(x)**3 + 1),
        a*sin(x)**n/(b*sin(x)**m + 1),
        (a*sin(x)**2 + 1)/(b*sin(x)**2 + 1),
        (a*sin(x)**3 + 1)/(b*sin(x)**3 + 1),
        (a*sin(x)**n + 1)/(b*sin(x)**m + 1),
        (a*sin(x)**5 + sin(x)**3 + 1)/(b*sin(x)**5 + sin(x)**3 + 1),
    ]

    integrated = []
    durations = []

    # One line per integrand, in the same order in all three dump files.
    f_integrants = open('dump_integrants', 'w')
    f_integrated = open('dump_integrated', 'w')
    f_durations = open('dump_duration', 'w')

    for index, integrant in enumerate(integrants):
        print '====================================='
        print index, ' of ', len(integrants)
        print '###', integrant
        start = clock()
        try:
            old_handler = signal.signal(signal.SIGALRM, timeout_handler)
            signal.alarm(120)
            integrated.append(indefinite_integral(integrant, x))
            signal.alarm(0)
        except Exception, e:
            # Record any error (including Maxima errors) as the result.
            integrated.append(e)
        finally:
            signal.signal(signal.SIGALRM, old_handler)
        durations.append(clock() - start)
        print '###', integrated[-1]
        print 'in %f seconds' % durations[-1]
        f_integrants.write(str(integrant))
        f_integrated.write(str(integrated[-1]))
        f_durations.write(str(durations[-1]))
        f_integrants.write('\n')
        f_integrated.write('\n')
        f_durations.write('\n')

Below is the complete table (also available as a wiki HTML page and a PDF).

| Legend |
| --- |
| Disgraceful failure |
| Timeout or manual interrupt |
| Returns an unevaluated integral |
| Asking for assumptions |
| Solution with fancy special functions |

| Integrand | SymPy `integrate` result | SymPy CPU time (s) | Sage `indefinite_integral` result | Sage CPU time (s) | Comments |
| --- | --- | --- | --- | --- | --- |
| x | x**2/2 | 0 | 1/2*x^2 | 0 | |
| a*x**n | a*x**(n + 1)/(n + 1) | 0 | a*x^(n + 1)/(n + 1) | 0 | Sage asks whether the denominator is zero before solving. |
| a*x**n + 1 | a*x**(n + 1)/(n + 1) + x | 0 | a*x^(n + 1)/(n + 1) + x | 0 | Sage asks whether the denominator is zero before solving. |
| a*x**b + 1 | a*x**(b + 1)/(b + 1) + x | 0 | a*x^(b + 1)/(b + 1) + x | 0 | Sage asks whether the denominator is zero before solving. |
| 1/x | log(x) | 0 | log(x) | 0 | |
| 1/(x + 1) | log(x + 1) | 0 | log(x + 1) | 0 | |
| 1/(x**2 + 1) | atan(x) | 0 | arctan(x) | 0 | |
| 1/(x**3 + 1) | log(x + 1)/3 - log(x**2 - x + 1)/6 + sqrt(3)*atan(2*sqrt(3)*x/3 - sqrt(3)/3)/3 | 0 | 1/3*sqrt(3)*arctan(1/3*(2*x - 1)*sqrt(3)) + 1/3*log(x + 1) - 1/6*log(x^2 - x + 1) | 0 | |
| x**(-n)/a | x**(-n + 1)/(a*(-n + 1)) | 0 | -x^(-n + 1)/((n - 1)*a) | 0 | Sage asks whether the denominator is zero before solving. |
| 1/(a*x**n + 1) | x*gamma(1/n)*lerchphi(a*x**n*exp_polar(I*pi), 1, 1/n)/(n**2*gamma(1 + 1/n)) | 2 | integrate(1/(x^n*a + 1), x) | 1 | SymPy solves with special functions an integral that Sage cannot solve. |
| 1/(a*x**b + 1) | x*gamma(1/b)*lerchphi(a*x**b*exp_polar(I*pi), 1, 1/b)/(b**2*gamma(1 + 1/b)) | 1 | integrate(1/(x^b*a + 1), x) | 1 | SymPy solves with special functions an integral that Sage cannot solve. |
| a*x**2/(b*x**2 + 1) | a*(sqrt(-1/b**3)*log(-b*sqrt(-1/b**3) + x)/2 - sqrt(-1/b**3)*log(b*sqrt(-1/b**3) + x)/2 + x/b) | 0 | (x/b - arctan(sqrt(b)*x)/b^(3/2))*a | 0 | |
| a*x**3/(b*x**3 + 1) | a*(RootSum(_t**3 + 1/(27*b**4), Lambda(_t, _t*log(-3*_t*b + x))) + x/b) | 0 | -1/6*(2*sqrt(3)*arctan(1/3*(2*b^(2/3)*x - b^(1/3))*sqrt(3)/b^(1/3))/b^(4/3) - 6*x/b - log(b^(2/3)*x^2 - b^(1/3)*x + 1)/b^(4/3) + 2*log((b^(1/3)*x + 1)/b^(1/3))/b^(4/3))*a | 0 | Interesting example deserving more study, as SymPy uses a sum over the roots of a high-order polynomial while Sage uses elementary functions. |
| a*x**n/(b*x**m + 1) | a*(n*x*x**n*gamma(n/m + 1/m)*lerchphi(b*x**m*exp_polar(I*pi), 1, n/m + 1/m)/(m**2*gamma(1 + n/m + 1/m)) + x*x**n*gamma(n/m + 1/m)*lerchphi(b*x**m*exp_polar(I*pi), 1, n/m + 1/m)/(m**2*gamma(1 + n/m + 1/m))) | 3 | (m*integrate(x^n/((m - n - 1)*b^2*x^(2*m) + 2*(m - n - 1)*x^m*b + m - n - 1), x) - x^(n + 1)/((m - n - 1)*x^m*b + m - n - 1))*a | 0 | SymPy solves with special functions an integral that Sage cannot solve. |
| (a*x**2 + 1)/(b*x**2 + 1) | a*x/b + sqrt((-a**2 + 2*a*b - b**2)/b**3)*log(-b*sqrt((-a**2 + 2*a*b - b**2)/b**3)/(a - b) + x)/2 - sqrt((-a**2 + 2*a*b - b**2)/b**3)*log(b*sqrt((-a**2 + 2*a*b - b**2)/b**3)/(a - b) + x)/2 | 0 | a*x/b - (a - b)*arctan(sqrt(b)*x)/b^(3/2) | 0 | Sage simplifies better (log-to-trig formulas). |
| (a*x**3 + 1)/(b*x**3 + 1) | a*x/b + RootSum(_t**3 + (a**3 - 3*a**2*b + 3*a*b**2 - b**3)/(27*b**4), Lambda(_t, _t*log(-3*_t*b/(a - b) + x))) | 0 | a*x/b - 1/3*(a*b - b^2)*sqrt(3)*arctan(1/3*(2*b^(2/3)*x - b^(1/3))*sqrt(3)/b^(1/3))/b^(7/3) + 1/6*(a*b^(2/3) - b^(5/3))*log(b^(2/3)*x^2 - b^(1/3)*x + 1)/b^2 - 1/3*(a*b^(2/3) - b^(5/3))*log((b^(1/3)*x + 1)/b^(1/3))/b^2 | 0 | Interesting example deserving more study, as SymPy uses a sum over the roots of a high-order polynomial while Sage uses elementary functions. |
| (a*x**n + 1)/(b*x**m + 1) | a*(n*x*x**n*gamma(n/m + 1/m)*lerchphi(b*x**m*exp_polar(I*pi), 1, n/m + 1/m)/(m**2*gamma(1 + n/m + 1/m)) + x*x**n*gamma(n/m + 1/m)*lerchphi(b*x**m*exp_polar(I*pi), 1, n/m + 1/m)/(m**2*gamma(1 + n/m + 1/m))) + x*gamma(1/m)*lerchphi(b*x**m*exp_polar(I*pi), 1, 1/m)/(m**2*gamma(1 + 1/m)) | 5 | a*m*integrate(x^n/((m - n - 1)*b^2*x^(2*m) + 2*(m - n - 1)*x^m*b + m - n - 1), x) - a*x^(n + 1)/((m - n - 1)*x^m*b + m - n - 1) + integrate(1/(x^m*b + 1), x) | 1 | SymPy solves with special functions an integral that Sage cannot solve. |
| (a*x**5 + x**3 + 1)/(b*x**5 + x**3 + 1) | a*x/b + RootSum(_t**5 + _t**3*(500*a**2*b**3 + 27*a**2 - 1000*a*b**4 - 54*a*b + 500*b**5 + 27*b**2)/(3125*b**6 + 108*b**3) + _t**2*(27*a**3 - 81*a**2*b + 81*a*b**2 - 27*b**3)/(3125*b**6 + 108*b**3) + _t*(9*a**4 - 36*a**3*b + 54*a**2*b**2 - 36*a*b**3 + 9*b**4)/(3125*b**6 + 108*b**3) + (a**5 - 5*a**4*b + 10*a**3*b**2 - 10*a**2*b**3 + 5*a*b**4 - b**5)/(3125*b**6 + 108*b**3), Lambda(_t, _t*log(x + (3662109375*_t**4*b**12 + 3986718750*_t**4*b**9 + 242757000*_t**4*b**6 + 3779136*_t**4*b**3 - 1054687500*_t**3*a*b**9 - 72900000*_t**3*a*b**6 - 1259712*_t**3*a*b**3 + 1054687500*_t**3*b**10 + 72900000*_t**3*b**7 + 1259712*_t**3*b**4 + 410156250*_t**2*a**2*b**9 + 655340625*_t**2*a**2*b**6 + 51267654*_t**2*a**2*b**3 + 944784*_t**2*a**2 - 820312500*_t**2*a*b**10 - 1310681250*_t**2*a*b**7 - 102535308*_t**2*a*b**4 - 1889568*_t**2*a*b + 410156250*_t**2*b**11 + 655340625*_t**2*b**8 + 51267654*_t**2*b**5 + 944784*_t**2*b**2 - 48828125*_t*a**3*b**9 - 186046875*_t*a**3*b**6 + 16774290*_t*a**3*b**3 + 629856*_t*a**3 + 146484375*_t*a**2*b**10 + 558140625*_t*a**2*b**7 - 50322870*_t*a**2*b**4 - 1889568*_t*a**2*b - 146484375*_t*a*b**11 - 558140625*_t*a*b**8 + 50322870*_t*a*b**5 + 1889568*_t*a*b**2 + 48828125*_t*b**12 + 186046875*_t*b**9 - 16774290*_t*b**6 - 629856*_t*b**3 - 2812500*a**4*b**6 + 3596400*a**4*b**3 + 104976*a**4 + 11250000*a**3*b**7 - 14385600*a**3*b**4 - 419904*a**3*b - 16875000*a**2*b**8 + 21578400*a**2*b**5 + 629856*a**2*b**2 + 11250000*a*b**9 - 14385600*a*b**6 - 419904*a*b**3 - 2812500*b**10 + 3596400*b**7 + 104976*b**4)/(9765625*a**4*b**8 + 26493750*a**4*b**5 + 746496*a**4*b**2 - 39062500*a**3*b**9 - 105975000*a**3*b**6 - 2985984*a**3*b**3 + 58593750*a**2*b**10 + 158962500*a**2*b**7 + 4478976*a**2*b**4 - 39062500*a*b**11 - 105975000*a*b**8 - 2985984*a*b**5 + 9765625*b**12 + 26493750*b**9 + 746496*b**6)))) | 106 | -(a - b)*integrate((x^3 + 1)/(b*x^5 + x^3 + 1), x)/b + a*x/b | 0 | SymPy solves with special functions an integral that Sage cannot solve. |
| sqrt(1/x) | 2*x*sqrt(1/x) | 0 | 2*x*sqrt(1/x) | 0 | |
| sqrt(1/(x + 1)) | 2*x*sqrt(1/(x + 1)) + 2*sqrt(1/(x + 1)) | 0 | 2/sqrt(1/(x + 1)) | 0 | |
| sqrt(1/(x**2 + 1)) | Integral(sqrt(1/(x**2 + 1)), x) | 0 | arcsinh(x) | 0 | SymPy cannot solve this simple integral while Sage can. |
| sqrt(1/(x**3 + 1)) | Integral(sqrt(1/(x**3 + 1)), x) | 3 | integrate(sqrt(1/(x^3 + 1)), x) | 0 | |
| sqrt(x**(-n)/a) | -2*x*sqrt(1/a)*sqrt(x**(-n))/(n - 2) | 0 | -2*x*sqrt(x^(-n)/a)/(n-2) | 0 | Sage asks whether the denominator is zero before solving. |
| sqrt(1/(a*x**n + 1)) | Integral(sqrt(1/(a*x**n + 1)), x) | 29 | integrate(sqrt(1/(x^n*a + 1)), x) | 0 | When both Sage and SymPy fail, Sage is quicker. |
| sqrt(1/(a*x**b + 1)) | Integral(sqrt(1/(a*x**b + 1)), x) | 35 | integrate(sqrt(1/(x^b*a + 1)), x) | 1 | When both Sage and SymPy fail, Sage is quicker. |
| sqrt(a*x**2/(b*x**2 + 1)) | sqrt(a)*x*sqrt(x**2)*sqrt(1/(b*x**2 + 1)) + sqrt(a)*sqrt(x**2)*sqrt(1/(b*x**2 + 1))/(b*x) | 2 | (sqrt(a)*b*x^2 + sqrt(a))/(sqrt(b*x^2 + 1)*b) | 0 | |
| sqrt(a*x**3/(b*x**3 + 1)) | Integral(sqrt(a*x**3/(b*x**3 + 1)), x) | 7 | integrate(sqrt(a*x^3/(b*x^3 + 1)), x) | 0 | |
| sqrt(a*x**n/(b*x**m + 1)) | Timeout | 115 | integrate(sqrt(x^n*a/(x^m*b + 1)), x) | 1 | When both Sage and SymPy fail, Sage is quicker. |
| sqrt((a*x**2 + 1)/(b*x**2 + 1)) | Timeout | 110 | integrate(sqrt((a*x^2 + 1)/(b*x^2 + 1)), x) | 0 | When both Sage and SymPy fail, Sage is quicker. |
| sqrt((a*x**3 + 1)/(b*x**3 + 1)) | Timeout | 109 | integrate(sqrt((a*x^3 + 1)/(b*x^3 + 1)), x) | 0 | When both Sage and SymPy fail, Sage is quicker. |
| sqrt((a*x**n + 1)/(b*x**m + 1)) | Timeout | 114 | integrate(sqrt((x^n*a + 1)/(x^m*b + 1)), x) | 1 | When both Sage and SymPy fail, Sage is quicker. |
| sqrt((a*x**5 + x**3 + 1)/(b*x**5 + x**3 + 1)) | Timeout | 104 | integrate(sqrt((a*x^5 + x^3 + 1)/(b*x^5 + x^3 + 1)), x) | 0 | When both Sage and SymPy fail, Sage is quicker. |
| log(x) | x*log(x) - x | 0 | x*log(x) - x | 0 | |
| log(1/x) | -x*log(x) + x | 0 | -x*log(x) + x | 0 | |
| log(1/(x + 1)) | -x*log(x + 1) + x - log(x + 1) | 0 | -(x + 1)*log(x + 1) + x + 1 | 0 | |
| log(1/(x**2 + 1)) | -x*log(x**2 + 1) + 2*x - 2*I*log(x + I) + I*log(x**2 + 1) | 2 | -x*log(x^2 + 1) + 2*x - 2*arctan(x) | 0 | |
| log(1/(x**3 + 1)) | -x*log(x**3 + 1) + 3*x - 3*log(x + 1)/2 + sqrt(3)*I*log(x + 1)/2 + log(x**3 + 1)/2 - sqrt(3)*I*log(x**3 + 1)/2 + sqrt(3)*I*log(x - 1/2 - sqrt(3)*I/2) | 6 | -x*log(x^3 + 1) - sqrt(3)*arctan(1/3*(2*x - 1)*sqrt(3)) + 3*x - log(x + 1) + 1/2*log(x^2 - x + 1) | 0 | |
| log(x**(-n)/a) | -n*x*log(x) + n*x - x*log(a) | 0 | n*x + x*log(x^(-n)/a) | 0 | |
| log(1/(a*x**n + 1)) | Integral(log(1/(a*x**n + 1)), x) | 68 | n*x - n*integrate(1/(a*e^(n*log(x)) + 1), x) - x*log(x^n*a + 1) | 1 | When both Sage and SymPy fail, Sage is quicker. |
| log(1/(a*x**b + 1)) | Timeout | 91 | b*x - b*integrate(1/(a*e^(b*log(x)) + 1), x) - x*log(a*e^(b*log(x)) + 1) | 2 | When both Sage and SymPy fail, Sage is quicker. |
| log(a*x**2/(b*x**2 + 1)) | x*log(a) + 2*x*log(x) - x*log(b*x**2 + 1) + 2*I*log(x - I*sqrt(1/b))/(b*sqrt(1/b)) - I*log(b*x**2 + 1)/(b*sqrt(1/b)) | 10 | x*log(a*x^2/(b*x^2 + 1)) - 2*arctan(sqrt(b)*x)/sqrt(b) | 0 | |
| log(a*x**3/(b*x**3 + 1)) | -216*b**4*x**6*(1/b)**(7/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 216*(-1)**(2/3)*b**4*x**6*(1/b)**(7/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 72*(-1)**(1/6)*sqrt(3)*b**4*x**6*(1/b)**(7/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 72*sqrt(3)*I*b**4*x**6*(1/b)**(7/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 588*b**2*x**5*(1/b)**(2/3)*log(a)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 1764*b**2*x**5*(1/b)**(2/3)*log(x)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 588*b**2*x**5*(1/b)**(2/3)*log(b*x**3 + 1)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 630*b**2*x**5*(1/b)**(2/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 420*(-1)**(5/6)*sqrt(3)*b**2*x**5*(1/b)**(2/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 210*sqrt(3)*I*b**2*x**5*(1/b)**(2/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 981*b**2*x**3*(1/b)**(4/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 981*(-1)**(2/3)*b**2*x**3*(1/b)**(4/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 327*(-1)**(1/6)*sqrt(3)*b**2*x**3*(1/b)**(4/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 327*sqrt(3)*I*b**2*x**3*(1/b)**(4/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 135*b**2*(1/b)**(7/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 135*(-1)**(2/3)*b**2*(1/b)**(7/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 45*(-1)**(1/6)*sqrt(3)*b**2*(1/b)**(7/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 45*sqrt(3)*I*b**2*(1/b)**(7/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 147*(-1)**(2/3)*b*x**4*log(a)**2/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 49*(-1)**(1/6)*sqrt(3)*b*x**4*log(a)**2/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 49*(-1)**(5/6)*sqrt(3)*b*x**4*log(a)**2/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 147*(-1)**(1/3)*b*x**4*log(a)**2/(588*b**2*x**4*(1/b)**(2/3) + 
588*b*x*(1/b)**(2/3)) – 882*(-1)**(2/3)*b*x**4*log(a)*log(x)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 294*(-1)**(1/6)*sqrt(3)*b*x**4*log(a)*log(x)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 294*(-1)**(5/6)*sqrt(3)*b*x**4*log(a)*log(x)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 882*(-1)**(1/3)*b*x**4*log(a)*log(x)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 294*(-1)**(1/3)*b*x**4*log(a)*log(b*x**3 + 1)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 98*(-1)**(5/6)*sqrt(3)*b*x**4*log(a)*log(b*x**3 + 1)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 98*(-1)**(1/6)*sqrt(3)*b*x**4*log(a)*log(b*x**3 + 1)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 294*(-1)**(2/3)*b*x**4*log(a)*log(b*x**3 + 1)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 588*(-1)**(2/3)*b*x**4*log(a)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 1323*(-1)**(2/3)*b*x**4*log(x)**2/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 441*(-1)**(1/6)*sqrt(3)*b*x**4*log(x)**2/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 441*(-1)**(5/6)*sqrt(3)*b*x**4*log(x)**2/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 1323*(-1)**(1/3)*b*x**4*log(x)**2/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 882*(-1)**(1/3)*b*x**4*log(x)*log(b*x**3 + 1)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 294*(-1)**(5/6)*sqrt(3)*b*x**4*log(x)*log(b*x**3 + 1)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 294*(-1)**(1/6)*sqrt(3)*b*x**4*log(x)*log(b*x**3 + 1)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 882*(-1)**(2/3)*b*x**4*log(x)*log(b*x**3 + 1)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 882*(-1)**(1/3)*b*x**4*log(x)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 294*(-1)**(5/6)*sqrt(3)*b*x**4*log(x)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 294*(-1)**(1/6)*sqrt(3)*b*x**4*log(x)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 
882*(-1)**(2/3)*b*x**4*log(x)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 294*(-1)**(5/6)*sqrt(3)*b*x**4*log(x – (-1)**(1/3)*(1/b)**(1/3))/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 882*(-1)**(1/3)*b*x**4*log(x – (-1)**(1/3)*(1/b)**(1/3))/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 147*(-1)**(2/3)*b*x**4*log(b*x**3 + 1)**2/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 49*(-1)**(1/6)*sqrt(3)*b*x**4*log(b*x**3 + 1)**2/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 49*(-1)**(5/6)*sqrt(3)*b*x**4*log(b*x**3 + 1)**2/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 147*(-1)**(1/3)*b*x**4*log(b*x**3 + 1)**2/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 588*(-1)**(2/3)*b*x**4*log(b*x**3 + 1)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 294*(-1)**(1/6)*sqrt(3)*b*x**4*log(x – (-1)**(1/3)*sqrt(3)*I*(1/b)**(1/3)/2 + (-1)**(1/3)*(1/b)**(1/3)/2)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 882*(-1)**(2/3)*b*x**4*log(x – (-1)**(1/3)*sqrt(3)*I*(1/b)**(1/3)/2 + (-1)**(1/3)*(1/b)**(1/3)/2)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 294*(-1)**(2/3)*b*x**4/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 98*(-1)**(1/6)*sqrt(3)*b*x**4/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 98*(-1)**(5/6)*sqrt(3)*b*x**4/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 294*(-1)**(1/3)*b*x**4/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 588*b*x**2*(1/b)**(2/3)*log(a)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 1764*b*x**2*(1/b)**(2/3)*log(x)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 588*b*x**2*(1/b)**(2/3)*log(b*x**3 + 1)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 945*b*x**2*(1/b)**(2/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 630*(-1)**(5/6)*sqrt(3)*b*x**2*(1/b)**(2/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 315*sqrt(3)*I*b*x**2*(1/b)**(2/3)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 
98*(-1)**(1/6)*sqrt(3)*x*log(a)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 98*(-1)**(5/6)*sqrt(3)*x*log(a)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 294*(-1)**(2/3)*x*log(a)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 294*(-1)**(1/3)*x*log(a)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 294*(-1)**(5/6)*sqrt(3)*x*log(x – (-1)**(1/3)*(1/b)**(1/3))/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 882*(-1)**(1/3)*x*log(x – (-1)**(1/3)*(1/b)**(1/3))/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 294*(-1)**(1/3)*x*log(b*x**3 + 1)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 294*(-1)**(2/3)*x*log(b*x**3 + 1)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 98*(-1)**(5/6)*sqrt(3)*x*log(b*x**3 + 1)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 98*(-1)**(1/6)*sqrt(3)*x*log(b*x**3 + 1)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) – 294*(-1)**(1/6)*sqrt(3)*x*log(x – (-1)**(1/3)*sqrt(3)*I*(1/b)**(1/3)/2 + (-1)**(1/3)*(1/b)**(1/3)/2)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) + 882*(-1)**(2/3)*x*log(x – (-1)**(1/3)*sqrt(3)*I*(1/b)**(1/3)/2 + (-1)**(1/3)*(1/b)**(1/3)/2)/(588*b**2*x**4*(1/b)**(2/3) + 588*b*x*(1/b)**(2/3)) | 26 | x*log(a*x^3/(b*x^3 + 1)) – 1/2*(2*sqrt(3)*a*arctan(1/3*(2*b^(2/3)*x – b^(1/3))*sqrt(3)/b^(1/3))/b^(1/3) – a*log(b^(2/3)*x^2 – b^(1/3)*x + 1)/b^(1/3) + 2*a*log((b^(1/3)*x + 1)/b^(1/3))/b^(1/3))/a | 0 | Sage simplifies better. |

| log(a*x**n/(b*x**m + 1)) | Timeout | 96 | (m - n + log(a))*x - m*integrate(1/(b*e^(m*log(x)) + 1), x) - x*log(x^m*b + 1) + x*log(x^n) | 2 | When both Sage and SymPy fail, Sage is quicker. |
| log((a*x**2 + 1)/(b*x**2 + 1)) | Integral(log((a*x**2 + 1)/(b*x**2 + 1)), x) | 72 | x*log((a*x^2 + 1)/(b*x^2 + 1)) + 2*arctan(sqrt(a)*x)/sqrt(a) - 2*arctan(sqrt(b)*x)/sqrt(b) | 0 | Sage asks whether `a` and `b` are positive and then returns an answer. SymPy fails irrespective of the assumptions. |
| log((a*x**3 + 1)/(b*x**3 + 1)) | Timeout | 89 | x*log((a*x^3 + 1)/(b*x^3 + 1)) + sqrt(3)*arctan(1/3*(2*a^(2/3)*x - a^(1/3))*sqrt(3)/a^(1/3))/a^(1/3) - sqrt(3)*arctan(1/3*(2*b^(2/3)*x - b^(1/3))*sqrt(3)/b^(1/3))/b^(1/3) - 1/2*log(a^(2/3)*x^2 - a^(1/3)*x + 1)/a^(1/3) + log((a^(1/3)*x + 1)/a^(1/3))/a^(1/3) + 1/2*log(b^(2/3)*x^2 - b^(1/3)*x + 1)/b^(1/3) - log((b^(1/3)*x + 1)/b^(1/3))/b^(1/3) | 0 | Sage asks whether `a` and `b` are positive and then returns an answer. SymPy fails irrespective of the assumptions. |
| log((a*x**n + 1)/(b*x**m + 1)) | Timeout | 89 | (m - n)*x - m*integrate(1/(b*e^(m*log(x)) + 1), x) + n*integrate(1/(x^n*a + 1), x) - x*log(x^m*b + 1) + x*log(x^n*a + 1) | 4 | When both Sage and SymPy fail, Sage is quicker. |
| log((a*x**5 + x**3 + 1)/(b*x**5 + x**3 + 1)) | Integral(log((a*x**5 + x**3 + 1)/(b*x**5 + x**3 + 1)), x) | 42 | -x*log(b*x^5 + x^3 + 1) + x*log(a*x^5 + x^3 + 1) - integrate((2*x^3 + 5)/(b*x^5 + x^3 + 1), x) + integrate((2*x^3 + 5)/(a*x^5 + x^3 + 1), x) | 1 | When both Sage and SymPy fail, Sage is quicker. |
| sin(x) | -cos(x) | 0 | -cos(x) | 0 | |
| sin(x)**n*cos(x)**m | Timeout | 102 | No result | 110 | Disgraceful failure by Sage. |
| sin(a*x)**n*cos(b*x)**m | Timeout | 81 | No result | 112 | Disgraceful failure by Sage. |
| 1/sin(x) | log(cos(x) - 1)/2 - log(cos(x) + 1)/2 | 0 | 1/2*log(cos(x) - 1) - 1/2*log(cos(x) + 1) | 0 | |
| 1/(sin(x) + 1) | -2/(tan(x/2) + 1) | 1 | -2/(sin(x)/(cos(x) + 1) + 1) | 0 | |
| 1/(sin(x)**2 + 1) | Timeout | 96 | 1/2*sqrt(2)*arctan(sqrt(2)*tan(x)) | 0 | Sage simply beats SymPy. |
| 1/(sin(x)**3 + 1) | Timeout | 87 | Maxima: `quotient’ by `zero’ | 78 | Disgraceful failure by Sage. |
| sin(x)**(-n)/a | Integral(sin(x)**(-n)/a, x) | 36 | No result | 227 | Disgraceful failure by Sage. |
| 1/(a*sin(x)**n + 1) | Timeout | 98 | Maxima: expt: undefined: 0 to a negative exponent. | 1 | Disgraceful failure by Sage. |
| 1/(a*sin(x)**b + 1) | Timeout | 83 | No result | 140 | Disgraceful failure by Sage. |
| a*sin(x)**2/(b*sin(x)**2 + 1) | Timeout | 93 | (x/b - arctan(sqrt(b + 1)*tan(x))/(sqrt(b + 1)*b))*a | 0 | Sage simply beats SymPy. |
| a*sin(x)**3/(b*sin(x)**3 + 1) | Timeout | 82 | No result | 568 | Disgraceful failure by Sage. |
| a*sin(x)**n/(b*sin(x)**m + 1) | Integral(a*sin(x)**n/(b*sin(x)**m + 1), x) | 24 | Manual interrupt | 1527 | Both Sage and SymPy fail; however, SymPy is quicker. |
| (a*sin(x)**2 + 1)/(b*sin(x)**2 + 1) | Timeout | 98 | a*x/b - (a - b)*arctan(sqrt(b + 1)*tan(x))/(sqrt(b + 1)*b) | 0 | Sage simply beats SymPy. |
| (a*sin(x)**3 + 1)/(b*sin(x)**3 + 1) | Timeout | 96 | Manual interrupt | 203 | |
| (a*sin(x)**n + 1)/(b*sin(x)**m + 1) | Timeout | 83 | Maxima: expt: undefined: 0 to a negative exponent. | 1 | Disgraceful failure by Sage. |
| (a*sin(x)**5 + sin(x)**3 + 1)/(b*sin(x)**5 + sin(x)**3 + 1) | Timeout | 89 | Manual interrupt | 142 | |